The way we view and use AI has changed dramatically over the past decade. Today, AI is being integrated into virtually every aspect of life, including business, health care, family life, and transportation. It has changed the way we make decisions and engage with customers, even if we do not always realize it. From automated decision-making systems (e.g., forecasting future consumption) and personalized products and services to generative AI and predictive analytics, increasingly powerful AI technologies will continue to shape how we create, consume, and use information.

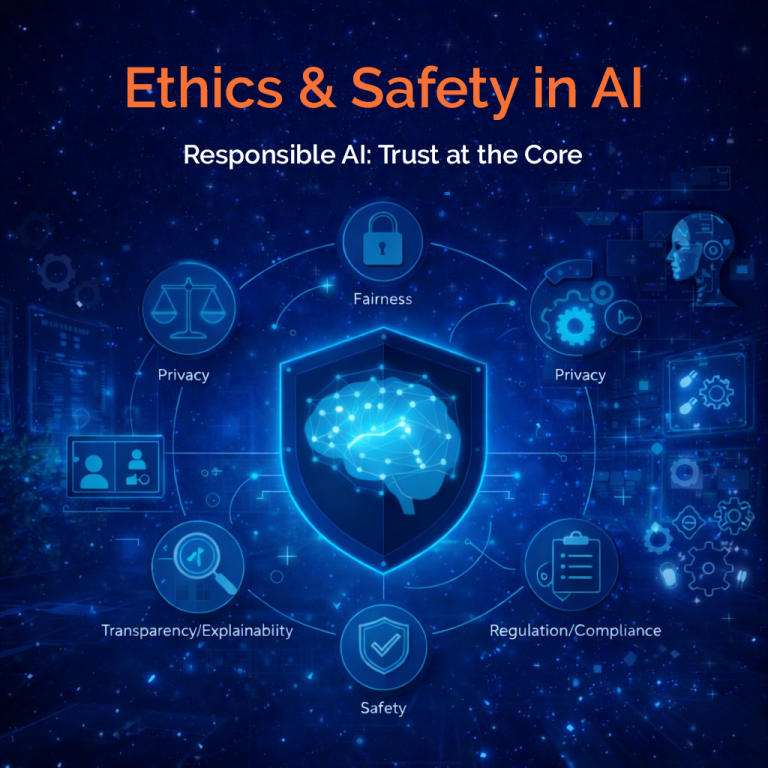

Yet as the use of AI grows, concerns about ethics and safety have arisen. These concerns include algorithmic bias and unfairness, data privacy, the potential for AI to be used irresponsibly, and the lack of regulation surrounding AI technology. Addressing these concerns is necessary for AI to remain widely trusted, adopted, and helpful to society.